An example from Convex Optimization page 650

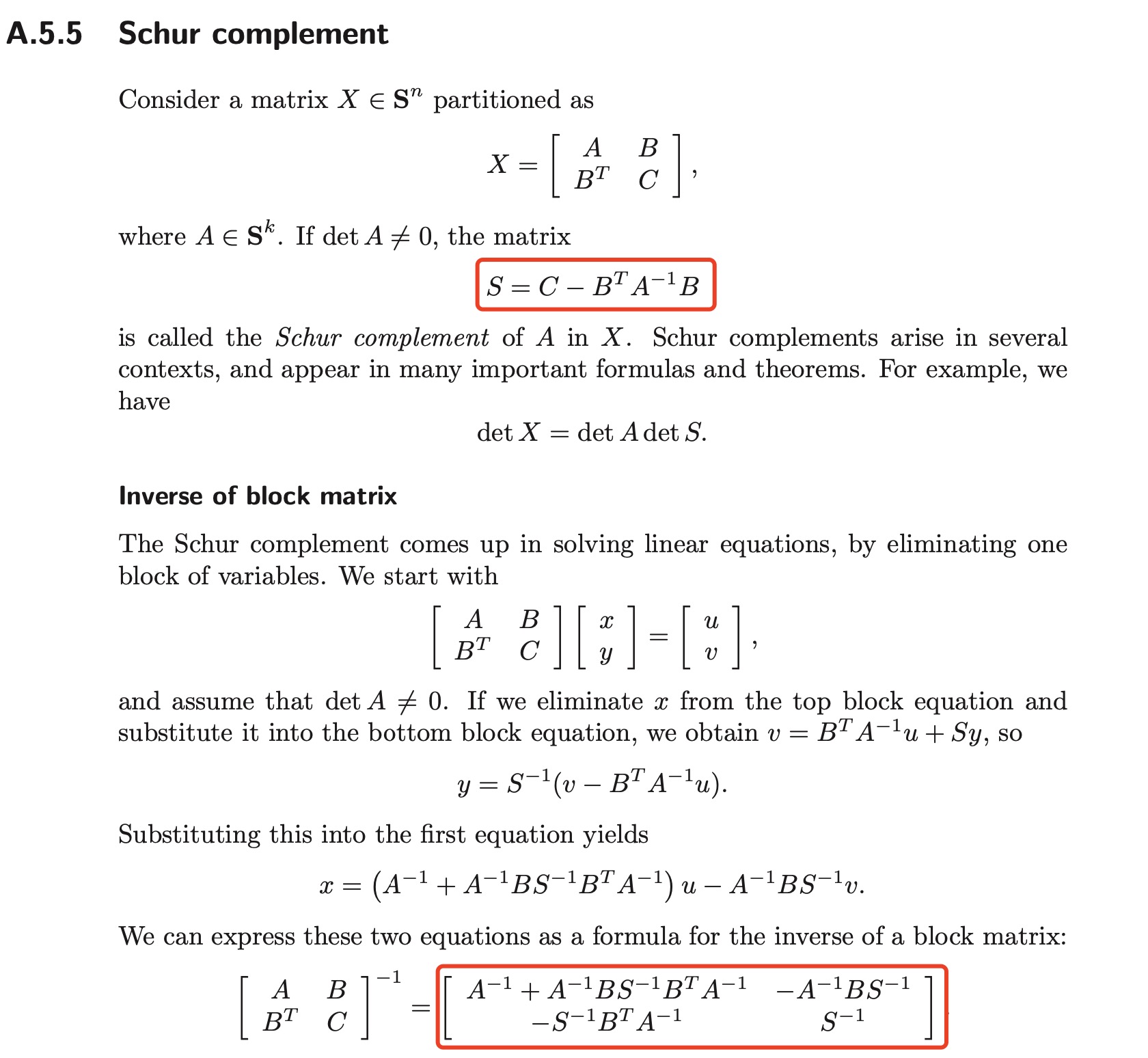

The original equation:

I❤️LA implementation:

given

A ∈ ℝ^(k×k)

B ∈ ℝ^(k×m)

C ∈ ℝ^(m×m)

S = C - BᵀA⁻¹B

[A⁻¹+A⁻¹BS⁻¹BᵀA⁻¹ -A⁻¹BS⁻¹

-S⁻¹BᵀA⁻¹ S⁻¹]I❤️LA compiled to C++/Eigen:

/*

given

A ∈ ℝ^(k×k)

B ∈ ℝ^(k×m)

C ∈ ℝ^(m×m)

S = C - BᵀA⁻¹B

[A⁻¹+A⁻¹BS⁻¹BᵀA⁻¹ -A⁻¹BS⁻¹

-S⁻¹BᵀA⁻¹ S⁻¹]

*/

#include <Eigen/Core>

#include <Eigen/Dense>

#include <Eigen/Sparse>

#include <iostream>

#include <set>

struct convex_optimization_650ResultType {

Eigen::MatrixXd S;

Eigen::MatrixXd ret;

convex_optimization_650ResultType(const Eigen::MatrixXd & S,

const Eigen::MatrixXd & ret)

: S(S),

ret(ret)

{}

};

convex_optimization_650ResultType convex_optimization_650(

const Eigen::MatrixXd & A,

const Eigen::MatrixXd & B,

const Eigen::MatrixXd & C)

{

const long k = A.cols();

const long m = B.cols();

assert( A.rows() == k );

assert( B.rows() == k );

assert( C.rows() == m );

assert( C.cols() == m );

Eigen::MatrixXd S = C - B.transpose() * A.colPivHouseholderQr().solve(B);

Eigen::MatrixXd ret_0(k+m, k+m);

ret_0 << A.inverse() + A.colPivHouseholderQr().solve(B) * S.colPivHouseholderQr().solve(B.transpose()) * A.inverse(), -A.colPivHouseholderQr().solve(B) * S.inverse(),

-S.colPivHouseholderQr().solve(B.transpose()) * A.inverse(), S.inverse();

Eigen::MatrixXd ret = ret_0;

return convex_optimization_650ResultType(S, ret);

}

void generateRandomData(Eigen::MatrixXd & A,

Eigen::MatrixXd & B,

Eigen::MatrixXd & C)

{

const int k = rand()%10;

const int m = rand()%10;

A = Eigen::MatrixXd::Random(k, k);

B = Eigen::MatrixXd::Random(k, m);

C = Eigen::MatrixXd::Random(m, m);

}

int main(int argc, char *argv[])

{

srand((int)time(NULL));

Eigen::MatrixXd A;

Eigen::MatrixXd B;

Eigen::MatrixXd C;

generateRandomData(A, B, C);

convex_optimization_650ResultType func_value = convex_optimization_650(A, B, C);

std::cout<<"return value:\n"<<func_value.ret<<std::endl;

return 0;

}I❤️LA compiled to Python/NumPy/SciPy:

"""

given

A ∈ ℝ^(k×k)

B ∈ ℝ^(k×m)

C ∈ ℝ^(m×m)

S = C - BᵀA⁻¹B

[A⁻¹+A⁻¹BS⁻¹BᵀA⁻¹ -A⁻¹BS⁻¹

-S⁻¹BᵀA⁻¹ S⁻¹]

"""

import numpy as np

import scipy

import scipy.linalg

from scipy import sparse

from scipy.integrate import quad

from scipy.optimize import minimize

class convex_optimization_650ResultType:

def __init__( self, S, ret):

self.S = S

self.ret = ret

def convex_optimization_650(A, B, C):

A = np.asarray(A, dtype=np.float64)

B = np.asarray(B, dtype=np.float64)

C = np.asarray(C, dtype=np.float64)

k = A.shape[1]

m = B.shape[1]

assert A.shape == (k, k)

assert B.shape == (k, m)

assert C.shape == (m, m)

S = C - B.T @ np.linalg.solve(A, B)

ret_0 = np.block([[np.linalg.inv(A) + np.linalg.solve(A, B) @ np.linalg.solve(S, B.T) @ np.linalg.inv(A), -np.linalg.solve(A, B) @ np.linalg.inv(S)], [-np.linalg.solve(S, B.T) @ np.linalg.inv(A), np.linalg.inv(S)]])

ret = ret_0

return convex_optimization_650ResultType(S, ret)

def generateRandomData():

k = np.random.randint(10)

m = np.random.randint(10)

A = np.random.randn(k, k)

B = np.random.randn(k, m)

C = np.random.randn(m, m)

return A, B, C

if __name__ == '__main__':

A, B, C = generateRandomData()

print("A:", A)

print("B:", B)

print("C:", C)

func_value = convex_optimization_650(A, B, C)

print("return value: ", func_value.ret)I❤️LA compiled to MATLAB:

function output = convex_optimization_650(A, B, C)

% output = convex_optimization_650(A, B, C)

%

% given

% A ∈ ℝ^(k×k)

% B ∈ ℝ^(k×m)

% C ∈ ℝ^(m×m)

%

% S = C - BᵀA⁻¹B

% [A⁻¹+A⁻¹BS⁻¹BᵀA⁻¹ -A⁻¹BS⁻¹

% -S⁻¹BᵀA⁻¹ S⁻¹]

if nargin==0

warning('generating random input data');

[A, B, C] = generateRandomData();

end

function [A, B, C] = generateRandomData()

k = randi(10);

m = randi(10);

A = randn(k, k);

B = randn(k, m);

C = randn(m, m);

end

k = size(A, 2);

m = size(B, 2);

assert( isequal(size(A), [k, k]) );

assert( isequal(size(B), [k, m]) );

assert( isequal(size(C), [m, m]) );

S = C - B' * (A\B);

ret_0 = [[inv(A) + (A\B) * (S\B') * inv(A), -(A\B) * inv(S)]; [-(S\B') * inv(A), inv(S)]];

ret = ret_0;

output.S = S;

output.ret = ret;

end

I❤️LA compiled to LaTeX:

\documentclass[12pt]{article}

\usepackage{mathdots}

\usepackage[bb=boondox]{mathalfa}

\usepackage{mathtools}

\usepackage{amssymb}

\usepackage{libertine}

\DeclareMathOperator*{\argmax}{arg\,max}

\DeclareMathOperator*{\argmin}{arg\,min}

\usepackage[paperheight=8in,paperwidth=4in,margin=.3in,heightrounded]{geometry}

\let\originalleft\left

\let\originalright\right

\renewcommand{\left}{\mathopen{}\mathclose\bgroup\originalleft}

\renewcommand{\right}{\aftergroup\egroup\originalright}

\begin{document}

\begin{center}

\resizebox{\textwidth}{!}

{

\begin{minipage}[c]{\textwidth}

\begin{align*}

\intertext{given}

\mathit{A} & \in \mathbb{R}^{ \mathit{k} \times \mathit{k} } \\

\mathit{B} & \in \mathbb{R}^{ \mathit{k} \times \mathit{m} } \\

\mathit{C} & \in \mathbb{R}^{ \mathit{m} \times \mathit{m} } \\

\\

\mathit{S} & = \mathit{C} - {\mathit{B}}^T\mathit{A}^{-1}\mathit{B} \\

\omit \span \begin{bmatrix}

\mathit{A}^{-1} + \mathit{A}^{-1}\mathit{B}\mathit{S}^{-1}{\mathit{B}}^T\mathit{A}^{-1} & -\mathit{A}^{-1}\mathit{B}\mathit{S}^{-1}\\

-\mathit{S}^{-1}{\mathit{B}}^T\mathit{A}^{-1} & \mathit{S}^{-1}\\

\end{bmatrix} \\

\end{align*}

\end{minipage}

}

\end{center}

\end{document}

I❤️LA LaTeX output: